Assessment Design & Generative Artificial Intelligence

1) The tension between testing & AI

The increasing availability of generative AI applications presents teachers with several challenges when designing examinations and examination tasks:

- Examinations and examination tasks should meet contemporary trends in Higher Education assessment.

- There is a possibility that students will use AI applications to cheat and plagiarise.

- Because students differ widely in their knowledge and skill levels and, above all, in their access to AI technologies, it is difficult for teachers to ensure examination fairness.

The aforementioned challenges cannot be solved solely at the level of individual teaching staff and courses; some require a university-wide strategy. Nevertheless, it is up to lecturers to take artificial intelligence into account when designing performance assessments and examinations and to make didactically sensible decisions. The information on this page is intended as a guide.

2) Possibilities for dealing with AI in the context of examinations

When designing exams, teachers can take AI technologies into account in the following ways (see Stony Brook University Center for Excellence in Learning and Teaching, 2024):

Ignoring or banning AI technologies is theoretically conceivable, but in practice neither approach makes sense. Ignoring AI paves the way for students to use generative artificial intelligence to solve learning and examination tasks. A blanket, unreflective ban does not do justice to a contemporary assessment culture. Artificial intelligence is part of everyday reality, and students do in fact use AI tools in their learning and work processes. Teachers should therefore develop constructive strategies for dealing with AI technologies in teaching rather than turn a blind eye to them.

The deliberate omission of AI in examinations means that the examination content and/or modalities are designed in such a way that the use of AI tools is not necessary or not possible. Although AI is taken into account when designing, the exam is designed "around the AI", so to speak.

Invigilating is about ensuring that AI tools cannot be used at all, or only to a limited extent, for example by making no AI systems, or only certain ones, accessible during digital examinations.

With the integrating approach, the use of AI systems is permitted or even required during the examination in order to solve certain tasks. The degree of AI utilisation can take different forms.

The approach of adapting refers to a rethinking of examinations, examination formats and performance assessment concepts in the face of AI. While the approaches described above assume that the traditional examination modalities remain in place and AI is either integrated or circumvented in various forms, the concept of adaptation includes an innovative component that brings about real changes in examinations (both for the instructor and for the students). This is usually done by opting for alternative examination formats that have not yet been used in a subject area or course. Artificial intelligence can be integrated or omitted, depending on what is didactically sound.

3) Testing with or without AI?

Based on the options presented above for dealing with AI in exam design, two forms of exam design can be derived:

- Exam design without AI (ignore/prohibit, design around, invigilate) and

- Exam design with AI (embrace, adapt).

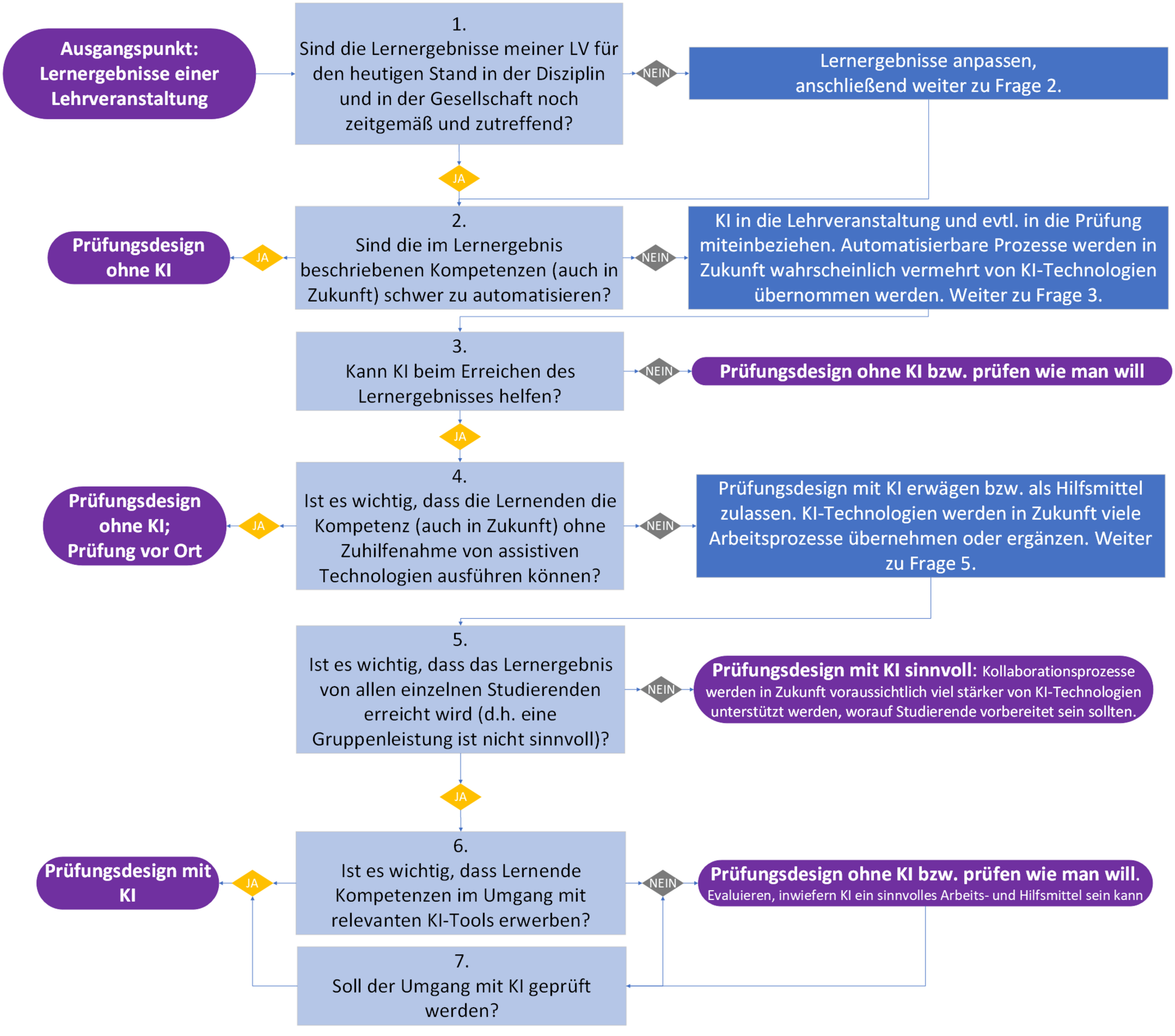

Which approach is preferable for your own teaching and learning settings can be determined by analysing learning outcomes. The following is a decision-making aid for doing so (in German, based on Monash University AI in Education Learning Circle, 2024 & Hanke, 2023):

CC-BY-SA University of Graz/Beatrice Kogler

4) Assessment techniques and tasks for both exam design approaches

Analysing the learning outcomes provides an indication of whether or not students should work with generative artificial intelligence in examinations. The examination design approaches are implemented by selecting suitable assessment techniques and setting didactically meaningful tasks. We can differentiate between AI-integrated, AI-robust and AI-unsafe assessment techniques and tasks.


Targeted work with generative AI applications is already useful in some teaching and learning contexts. The degree of integration and utilisation of AI tools can vary and take the following forms (cf. Perkins et al., 2024):

- AI-supported idea generation and structuring: generative AI can be used in examinations for brainstorming and for generating ideas and outlines. AI-based literature research is also categorised as this form of AI use. AI-generated content is not permitted as a submission.

- AI-supported editing: AI can be used to improve the quality of the student work produced. No new content may be created and submitted using generative artificial intelligence. Examples of this scenario would be the use of writing assistants to improve spelling/grammar/word choice and tools for image editing.

- Guided AI-supported task processing: AI can or must be used to process tasks or subtasks. The AI-generated output is then processed by the learners, i.e. evaluated, discussed, commented on, corrected, etc. The comparison of AI-generated and human-generated content could also be a task. Teachers assess the critical analysis of the AI-generated content and/or the formulated prompts.

- Unrestricted AI-supported task processing: Generative AI is used like a co-pilot to process tasks and improve work results. Testing and comparing different generative AI tools can also be at the centre of the task. Teachers can specify the tools to be used or leave the choice up to the students. The work result of the human-AI collaboration (and thus implicitly the use of AI tools and AI-generated output) is assessed. Examples of this scenario would be the AI-supported creation of written work, media and designs, the writing of software code or the AI-supported completion of project work.

If AI-integrated examination tasks are to be used, fundamental prerequisites must be created before the examination is carried out in order to ensure that the examination is fair.

- It must be ensured that all candidates have access to the necessary and useful AI tools during the examination.

- Beforehand, students must be given the opportunity to learn how to use generative AI in general and the AI tools that are to be used in the exam in particular. This in turn requires the necessary knowledge and skills on the part of the lecturers in order to prepare students for the use of AI in the best possible way.

- Teachers must make it clear before the start of the course how and to what extent the use of generative artificial intelligence will be included in the performance assessment. For example, they can use the text modules provided by the University of Graz for an AI statement in the course description.

- Guidelines on the use of AI tools, on maintaining academic integrity and good scientific practice, on labelling the use of generative AI technologies, and on ethical implications should be communicated and made available to students.

So-called AI-robust assessment techniques and tasks make it difficult for students to use generative artificial intelligence directly to solve tasks. According to Williams (2023), Charles Sturt University (2024), Ifelebuegu (2023), Jochim & Lenz-Kesekamp (2023) and Lee (2023), such assessment techniques are:

- Proctored exams/closed-book exams

- Oral examinations

- Oral performances such as (poster) presentations, talks and discussant responses

- Practical examinations/demonstrations on site

- Application and transfer tasks

- Reflection tasks on practical experiences or tasks

- Portfolios

- Process documentation

- Group/project work

- Peer assessment

- Production of media and other artefacts

- Problem-based tasks/case studies

The examination formats listed above have in common that they can serve the higher taxonomy levels according to Anderson and Krathwohl (2001) and thus enable competence-orientated testing. By working together with others, learners can also develop personal and social skills. However, there are limitations to an examination design without AI, because

- traditional proctored exams, which mainly use closed questions (multiple and single-choice questions), are not suitable for competency-based testing if the primary focus is on memorising and understanding information.

- oral examinations require a lot of resources and are less objective and reliable.

- many of the examination formats listed above do not rule out the use of generative artificial intelligence, e.g. as a formulation aid for reflection tasks, documentation or portfolio work or for generating texts for presentations.

- generative AI would be a useful and practical tool for some of the formats listed, such as for supporting/organising group and project work or for media production.

The choice of examination modalities should be made with this in mind and with regard to the learning outcomes.

With the following assessment techniques and tasks, it is very easy for generative artificial intelligence to produce suitable answers, suggested solutions or texts that students could use in an attempt to cheat. For this reason, they are labelled "AI-unsafe" in the literature (cf. Becker et al., 2023; Charles Sturt University, 2024; Ifelebuegu, 2023; Nikolic et al., 2023; Williams, 2023):

- Written (final) papers

- Essays, papers and other short text forms

- Unsupervised online tests and quizzes

- Unproctored multiple-choice exams

- Unsupervised open-book examinations

- Asynchronous examinations, e.g. online tasks in the learning management system

If the learning outcomes require learners to develop and demonstrate the defined competences completely independently and without the help of AI tools, the examination formats mentioned are not suitable. However, all of them except written (final) papers could still support the learning process as unassessed practice and self-assessment options.

One way to make the listed examination formats more "AI-safe" is to combine them with "AI-robust" examination tasks. Options include questioning students after they have solved (online) tasks, an oral presentation of written content, process documentation and/or final discussions in combination with written assignments, or a learning diary kept throughout the course. The examinations categorised as more AI-robust could then be weighted more heavily in the assessment.

It should be borne in mind that expanding the performance assessment concept to include further partial performances increases the workload and resources required for both lecturers and students. Keep an eye on the students' workload and weigh up whether the course leaves enough time to hold further examinations.

If the use of generative artificial intelligence is desired or even necessary to achieve the learning outcomes, the examination formats listed are possible. However, the form and extent of use of generative artificial intelligence and the form of documentation must of course be defined and communicated in advance.

5) Testing & AI: general recommendations

The current discourse on testing and AI (cf. in particular Ifelebuegu, 2023; Jochim & Lenz-Kesekamp, 2023; Nikolic et al., 2023; Williams, 2023) suggests the following pedagogical recommendations for teachers:

6) Summary

Even if the already complex task of testing is made even more challenging by the increasing availability of generative artificial intelligence, the basic didactic approach remains the same. The starting point for exam design is and remains the learning outcomes. Based on these, teachers can first determine the relevance of generative artificial intelligence in the course and then evaluate whether the examination should exclude generative artificial intelligence (examination design without AI) or integrate it to varying degrees (examination design with AI). Different examination formats are differently suited to implementing these two approaches.

For the moment, the goal for teachers can be an authentic examination design that confronts students with realistic, competence-orientated 21st-century tasks in line with the future skills approach. These should mainly require students to demonstrate problem-solving skills, critical thinking and the competences acquired in the course. Depending on the learning outcomes and framework conditions, generative artificial intelligence can be used to varying degrees, and its use can be closely supervised by the teacher.